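Contents

- Project background and analysis
- Data loading and inspection
- Data preprocessing
- Data correction
- Missing-value imputation
- Feature creation
- Data conversion
- Data cleaning
- Data splitting
- Exploratory analysis
- Modeling
- Model evaluation and optimization
- Cross-validation
- Hyperparameter tuning
- Feature selection
- Model validation
- Improvements and summary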

01

Project background and analysis

02

Data loading and inspection

# Import libraries and print their versions

import sys

print("Python version: {}". format(sys.version))

import pandas as pd

print("pandas version: {}". format(pd.__version__))

import matplotlib

print("matplotlib version: {}". format(matplotlib.__version__))

import numpy as np

print("NumPy version: {}". format(np.__version__))

import scipy as sp

print("SciPy version: {}". format(sp.__version__))

import IPython

from IPython import display

print("IPython version: {}". format(IPython.__version__))

import sklearn

print("scikit-learn version: {}". format(sklearn.__version__))

import random

import time

# Ignore warnings

import warnings

warnings.filterwarnings('ignore')

print('-'*25)

# Put the three data files in the working directory, then list it to check

from subprocess import check_output

print(check_output(["ls"]).decode("utf8"))

# Import modeling libraries

from sklearn import svm, tree, linear_model, neighbors, naive_bayes, ensemble, discriminant_analysis, gaussian_process

from xgboost import XGBClassifier

from sklearn.preprocessing import OneHotEncoder, LabelEncoder

from sklearn import feature_selection

from sklearn import model_selection

from sklearn import metrics

import matplotlib as mpl

import matplotlib.pyplot as plt

import matplotlib.pylab as pylab

import seaborn as sns

from pandas.plotting import scatter_matrix  # formerly pandas.tools.plotting, which newer pandas versions no longer provide

# Visualization settings

%matplotlib inline

mpl.style.use('ggplot')

sns.set_style('white')

pylab.rcParams['figure.figsize'] = 12,8

data_raw = pd.read_csv('train.csv')

data_val = pd.read_csv('test.csv')

data1 = data_raw.copy(deep = True)

data_cleaner = [data1, data_val]

print (data_raw.info())

data_raw.sample(10)

Output of data_raw.info():

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 891 entries, 0 to 890

Data columns (total 12 columns):

PassengerId 891 non-null int64

Survived 891 non-null int64

Pclass 891 non-null int64

Name 891 non-null object

Sex 891 non-null object

Age 714 non-null float64

SibSp 891 non-null int64

Parch 891 non-null int64

Ticket 891 non-null object

Fare 891 non-null float64

Cabin 204 non-null object

Embarked 889 non-null object

dtypes: float64(2), int64(5), object(5)

memory usage: 83.6+ KB

None

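- Survived is the outcome (dependent) variable. It is a binary nominal variable: 1 = survived, 0 = did not survive. All other variables are potential predictor (independent) variables. Note that more predictors do not make a better model; the right variables do.

- PassengerId and Ticket are assumed to be random unique identifiers with no impact on the outcome, so they are excluded from the analysis.

- Pclass is an ordinal variable for ticket class and serves as a proxy for socio-economic status (SES): 1 = upper class, 2 = middle class, 3 = lower class.

- Name is a nominal variable. It can be used in feature engineering: gender can be derived from the title, family size from the surname, and SES from titles such as Dr or Master. Since these variables already exist, we use it to check whether the title (e.g. Master) makes a difference.

- Sex and Embarked are nominal variables. They will be converted to dummy variables for mathematical computation.

- Age and Fare are continuous quantitative variables.

- SibSp is the number of siblings/spouses aboard and Parch the number of parents/children aboard. Both are discrete quantitative variables, and feature engineering can combine them into a family-size variable.

- Cabin is a nominal variable that could be used in feature engineering to describe the approximate position on the ship when the accident happened, and the deck level. However, it has many null values (only 204 of 891 non-null), so it adds no value and is excluded from the analysis.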

03

Data preprocessing

- Correcting
- Completing
- Creating
- Converting

Data correction

Missing-value imputation

Feature creation

Data conversion

print('Train columns with null values:\n', data1.isnull().sum())

print("-"*10)

print('Test/Validation columns with null values:\n', data_val.isnull().sum())

print("-"*10)

data_raw.describe(include = 'all')

Start cleaning. Relevant pandas documentation:

- pandas.DataFrame
- pandas.DataFrame.info
- pandas.DataFrame.describe
- Indexing and Selecting Data
- pandas.isnull
- pandas.DataFrame.sum
- pandas.DataFrame.mode
- pandas.DataFrame.copy
- pandas.DataFrame.fillna
- pandas.DataFrame.drop
- pandas.Series.value_counts
- pandas.DataFrame.loc

# Handle missing values

for dataset in data_cleaner:

    # Fill Age and Fare with the median, Embarked with the mode (most frequent port)

    dataset['Age'] = dataset['Age'].fillna(dataset['Age'].median())

    dataset['Embarked'] = dataset['Embarked'].fillna(dataset['Embarked'].mode()[0])

    dataset['Fare'] = dataset['Fare'].fillna(dataset['Fare'].median())

# Drop unused columns from the training set (data_val keeps them, including PassengerId)

drop_column = ['PassengerId','Cabin', 'Ticket']

data1.drop(drop_column, axis=1, inplace = True)

print(data1.isnull().sum())

print("-"*10)

print(data_val.isnull().sum())
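The encoding loop that follows uses Title, AgeBin and FareBin, and data1_x lists FamilySize and IsAlone, but the code shown never creates these columns (the "Feature creation" step appears only as a heading). Below is a minimal sketch of how they could be derived. The helper name add_features, the title regex and the bin counts are assumptions. The FareBin intervals in the table later in the article, such as (7.91, 14.454], look like fare quartiles, which is why pd.qcut with 4 bins is used here.

```python
import pandas as pd


def add_features(df: pd.DataFrame) -> None:
    """Hypothetical helper: add FamilySize, IsAlone, Title, FareBin and AgeBin in place."""
    # Family size = siblings/spouses + parents/children + the passenger
    df["FamilySize"] = df["SibSp"] + df["Parch"] + 1
    # 1 if the passenger travelled alone, else 0
    df["IsAlone"] = (df["FamilySize"] == 1).astype(int)
    # Names look like "Surname, Title. Given names": capture the text between ", " and "."
    df["Title"] = df["Name"].str.extract(r",\s*([^.]+)\.", expand=False)
    # Fare quartiles (qcut) and five equal-width age bins (cut); bin counts are assumptions
    df["FareBin"] = pd.qcut(df["Fare"], 4)
    df["AgeBin"] = pd.cut(df["Age"].astype(int), 5)


# Toy rows in the train.csv column format (illustrative values only)
toy = pd.DataFrame({
    "Name": ["Braund, Mr. Owen Harris", "Cumings, Mrs. John Bradley",
             "Heikkinen, Miss. Laina", "Futrelle, Mrs. Jacques Heath",
             "Allen, Mr. William Henry", "Palsson, Master. Gosta Leonard",
             "Johnson, Mrs. Oscar W", "Nasser, Mrs. Nicholas"],
    "SibSp": [1, 1, 0, 1, 0, 3, 0, 1],
    "Parch": [0, 0, 0, 0, 0, 1, 2, 0],
    "Fare": [7.25, 71.28, 7.925, 53.1, 8.05, 21.075, 11.13, 30.07],
    "Age": [22.0, 38.0, 26.0, 35.0, 35.0, 2.0, 27.0, 14.0],
})
add_features(toy)
print(toy[["Title", "FamilySize", "IsAlone", "FareBin", "AgeBin"]])
```

Because the helper modifies the DataFrame in place, on the real data it could run as `for dataset in data_cleaner: add_features(dataset)` before the encoding loop.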

# Encode categorical columns as integer codes (Title, AgeBin and FareBin must already exist)

label = LabelEncoder()

for dataset in data_cleaner:

    dataset['Sex_Code'] = label.fit_transform(dataset['Sex'])

    dataset['Embarked_Code'] = label.fit_transform(dataset['Embarked'])

    dataset['Title_Code'] = label.fit_transform(dataset['Title'])

    dataset['AgeBin_Code'] = label.fit_transform(dataset['AgeBin'])

    dataset['FareBin_Code'] = label.fit_transform(dataset['FareBin'])

Target = ['Survived']

data1_x = ['Sex','Pclass', 'Embarked', 'Title','SibSp', 'Parch', 'Age', 'Fare', 'FamilySize', 'IsAlone'] #pretty name/values for charts

data1_x_calc = ['Sex_Code','Pclass', 'Embarked_Code', 'Title_Code','SibSp', 'Parch', 'Age', 'Fare'] #coded for algorithm calculation

data1_xy = Target + data1_x

print('Original X Y: ', data1_xy, '\n')

# Define x variables using the binned features in place of the continuous ones

data1_x_bin = ['Sex_Code','Pclass', 'Embarked_Code', 'Title_Code', 'FamilySize', 'AgeBin_Code', 'FareBin_Code']

data1_xy_bin = Target + data1_x_bin

print('Bin X Y: ', data1_xy_bin, '\n')

data1_dummy = pd.get_dummies(data1[data1_x])

data1_x_dummy = data1_dummy.columns.tolist()

data1_xy_dummy = Target + data1_x_dummy

print('Dummy X Y: ', data1_xy_dummy, '\n')

data1_dummy.head()
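The code above uses two encodings: LabelEncoder for the *_Code columns and pd.get_dummies for data1_dummy. This toy comparison shows the difference. LabelEncoder sorts the class labels and numbers them from 0, so fitting it separately on the training and validation sets, as the encoding loop does, yields matching codes only when both sets contain the same categories.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

toy = pd.DataFrame({"Sex": ["male", "female", "female"],
                    "Embarked": ["S", "C", "Q"],
                    "Pclass": [3, 1, 2]})

# LabelEncoder assigns integer codes in sorted (alphabetical) order of the classes
label = LabelEncoder()
sex_codes = label.fit_transform(toy["Sex"])  # female -> 0, male -> 1
print(list(label.classes_), list(sex_codes))

# get_dummies one-hot encodes the object columns and passes numeric columns through
dummies = pd.get_dummies(toy)
print(dummies.columns.tolist())
```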

print('Train columns with null values: \n', data1.isnull().sum())

print("-"*10)

print (data1.info())

print("-"*10)

print('Test/Validation columns with null values: \n', data_val.isnull().sum())

print("-"*10)

print (data_val.info())

print("-"*10)

data_raw.describe(include = 'all')

Split into training and test sets

train1_x, test1_x, train1_y, test1_y = model_selection.train_test_split(data1[data1_x_calc], data1[Target], random_state = 0)

train1_x_bin, test1_x_bin, train1_y_bin, test1_y_bin = model_selection.train_test_split(data1[data1_x_bin], data1[Target] , random_state = 0)

train1_x_dummy, test1_x_dummy, train1_y_dummy, test1_y_dummy = model_selection.train_test_split(data1_dummy[data1_x_dummy], data1[Target], random_state = 0)

print("Data1 Shape: {}".format(data1.shape))

print("Train1 Shape: {}".format(train1_x.shape))

print("Test1 Shape: {}".format(test1_x.shape))

train1_x_bin.head()
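The splits above rely on the default test_size of train_test_split, which is 0.25 when neither test_size nor train_size is given. With 891 rows the test part gets ceil(0.25 × 891) = 223 rows and the training part 668. A quick check on placeholder arrays shaped like the training data:

```python
import numpy as np
from sklearn.model_selection import train_test_split

X = np.arange(891 * 2).reshape(891, 2)  # placeholder features, 891 rows like train.csv
y = np.arange(891) % 2                  # placeholder labels
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
print(X_train.shape, X_test.shape)  # (668, 2) (223, 2)
```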

04

Exploratory analysis

for x in data1_x:

    # Survival rate per category for every non-continuous feature

    if data1[x].dtype != 'float64':

        print('Survival Correlation by:', x)

        print(data1[[x, Target[0]]].groupby(x, as_index=False).mean())

        print('-'*10, '\n')

print(pd.crosstab(data1['Title'], data1[Target[0]]))

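We know that class matters for survival, so let's now compare the classes on other features.

The plots show that: 1) the higher the class, the higher the fare; 2) passengers in higher classes tend to be older; 3) the higher the class, the smaller the family travelling together. The proportion of deaths shows little relationship with class. We also know that sex matters for survival, so next we compare sex with a second feature.

Women have a higher survival rate than men, and women who embarked at C or travelled alone have relatively high survival rates. Next, we look at more comparisons.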
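The comparisons above are plots. A numeric counterpart is a pivot table of mean survival by sex and a second feature. A sketch on toy rows (on the real data, data1 with Survived and a column such as Embarked, Pclass or IsAlone would take their place):

```python
import pandas as pd

toy = pd.DataFrame({
    "Sex": ["female", "female", "male", "male", "female", "male"],
    "Embarked": ["C", "S", "C", "S", "S", "S"],
    "Survived": [1, 1, 0, 0, 0, 1],
})
# Mean of a 0/1 column = survival rate for each (Sex, Embarked) cell
rates = toy.pivot_table(index="Sex", columns="Embarked", values="Survived", aggfunc="mean")
print(rates)
```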

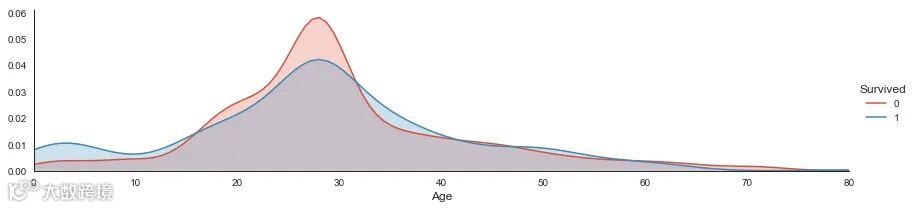

Next, we plot the age distribution of passengers who survived and who did not.
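A sketch of a stacked age histogram split by survival, on toy rows. It sets the headless Agg backend so it runs outside a notebook; under `%matplotlib inline` that line would be dropped, and data1['Age'] and data1['Survived'] would replace the toy columns.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend for running outside a notebook; omit under %matplotlib inline
import matplotlib.pyplot as plt
import pandas as pd

toy = pd.DataFrame({"Age": [22, 38, 26, 35, 35, 2, 27, 14, 4, 58],
                    "Survived": [0, 1, 1, 1, 0, 0, 1, 1, 1, 1]})

fig, ax = plt.subplots()
# Stacked histogram: survivors and non-survivors share the same five age bins
ax.hist([toy.loc[toy["Survived"] == 1, "Age"].to_numpy(),
         toy.loc[toy["Survived"] == 0, "Age"].to_numpy()],
        bins=5, stacked=True, color=["g", "r"], label=["Survived", "Dead"])
ax.set_title("Age histogram by survival")
ax.set_xlabel("Age (years)")
ax.set_ylabel("# of passengers")
ax.legend()
```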

Plot histograms of survivors by sex, age and other features.

Finally, visualize the whole dataset.

05

Modeling

- Ensemble methods (EM)
- Generalized linear models (GLM)
- Naive Bayes
- K-nearest neighbors
- Support vector machines (SVM)
- Decision trees
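The algorithm families listed above can be compared side by side with cross-validation. Below is a sketch that uses one representative scikit-learn estimator per family. The choice of estimators, the 60/30 ShuffleSplit and the synthetic make_classification data (standing in for the encoded Titanic features) are assumptions.

```python
from sklearn import ensemble, linear_model, model_selection, naive_bayes, neighbors, svm, tree
from sklearn.datasets import make_classification

X, y = make_classification(n_samples=300, n_features=7, random_state=0)

models = {
    "RandomForest (ensemble)": ensemble.RandomForestClassifier(n_estimators=100, random_state=0),
    "LogisticRegression (GLM)": linear_model.LogisticRegression(max_iter=1000),
    "GaussianNB": naive_bayes.GaussianNB(),
    "KNeighbors": neighbors.KNeighborsClassifier(),
    "SVC": svm.SVC(random_state=0),
    "DecisionTree": tree.DecisionTreeClassifier(random_state=0),
}
# 10 random splits: 60% train, 30% test (the remaining 10% is unused in each split)
cv = model_selection.ShuffleSplit(n_splits=10, test_size=0.3, train_size=0.6, random_state=0)

scores = {}
for name, model in models.items():
    result = model_selection.cross_validate(model, X, y, cv=cv)
    scores[name] = result["test_score"].mean()  # mean accuracy over the 10 test folds

for name, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<26} {score:.3f}")
```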

06

Model evaluation

Coin Flip Model Accuracy: 49.49%

Coin Flip Model Accuracy w/SciKit: 49.49%
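A coin-flip model predicts 0 or 1 at random, which gives a floor any real model should beat. A sketch with placeholder labels (the real Survived column would replace y_true), computing accuracy both by hand and with metrics.accuracy_score:

```python
import numpy as np
from sklearn import metrics

rng = np.random.default_rng(0)
y_true = rng.integers(0, 2, size=891)  # placeholder labels
y_coin = rng.integers(0, 2, size=891)  # coin flip: 0 or 1 with equal probability

manual = (y_true == y_coin).mean()     # fraction of matching predictions
sk = metrics.accuracy_score(y_true, y_coin)
print(f"Coin Flip Model Accuracy: {manual:.2%}")
print(f"Coin Flip Model Accuracy w/SciKit: {sk:.2%}")
```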

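Summarizing the survival rate of female passengers by class, port of embarkation and fare bin gives the following table.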

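Survival Decision Tree w/Female Node:

| Sex | Pclass | Embarked | FareBin | Survival rate |
|---|---|---|---|---|
| female | 1 | C | (14.454, 31.0] | 0.666667 |
| female | 1 | C | (31.0, 512.329] | 1.000000 |
| female | 1 | Q | (31.0, 512.329] | 1.000000 |
| female | 1 | S | (14.454, 31.0] | 1.000000 |
| female | 1 | S | (31.0, 512.329] | 0.955556 |
| female | 2 | C | (7.91, 14.454] | 1.000000 |
| female | 2 | C | (14.454, 31.0] | 1.000000 |
| female | 2 | C | (31.0, 512.329] | 1.000000 |
| female | 2 | Q | (7.91, 14.454] | 1.000000 |
| female | 2 | S | (7.91, 14.454] | 0.875000 |
| female | 2 | S | (14.454, 31.0] | 0.916667 |
| female | 2 | S | (31.0, 512.329] | 1.000000 |
| female | 3 | C | (-0.001, 7.91] | 1.000000 |
| female | 3 | C | (7.91, 14.454] | 0.428571 |
| female | 3 | C | (14.454, 31.0] | 0.666667 |
| female | 3 | Q | (-0.001, 7.91] | 0.750000 |
| female | 3 | Q | (7.91, 14.454] | 0.500000 |
| female | 3 | Q | (14.454, 31.0] | 0.714286 |
| female | 3 | S | (-0.001, 7.91] | 0.533333 |
| female | 3 | S | (7.91, 14.454] | 0.448276 |
| female | 3 | S | (14.454, 31.0] | 0.357143 |
| female | 3 | S | (31.0, 512.329] | 0.125000 |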
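The table can be turned into a hand-written decision rule. Below is a simplified sketch. The function name female_node_rule is hypothetical, and the rule is an assumption read off the table: predict survival for every female except third-class passengers who embarked at S and paid more than 7.91 (the S rows with survival below 0.5). It ignores smaller exceptions such as the 3rd-class C (7.91, 14.454] group, and it predicts 0 for all males because the table covers only the female node.

```python
import numpy as np
import pandas as pd


def female_node_rule(df: pd.DataFrame) -> np.ndarray:
    """Hypothetical hand-written rule read off the female-node table."""
    is_female = df["Sex"] == "female"
    # 3rd class, embarked at S, fare above the first fare-bin edge 7.91: survival < 0.5 in the table
    exception = (df["Pclass"] == 3) & (df["Embarked"] == "S") & (df["Fare"] > 7.91)
    # Predict 1 (survived) for females outside the exception, 0 for everyone else
    return np.where(is_female & ~exception, 1, 0)


toy = pd.DataFrame({
    "Sex": ["female", "female", "female", "male"],
    "Pclass": [1, 3, 3, 1],
    "Embarked": ["C", "S", "S", "C"],
    "Fare": [80.0, 7.5, 20.0, 100.0],
})
print(female_node_rule(toy))  # [1 1 0 0]
```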

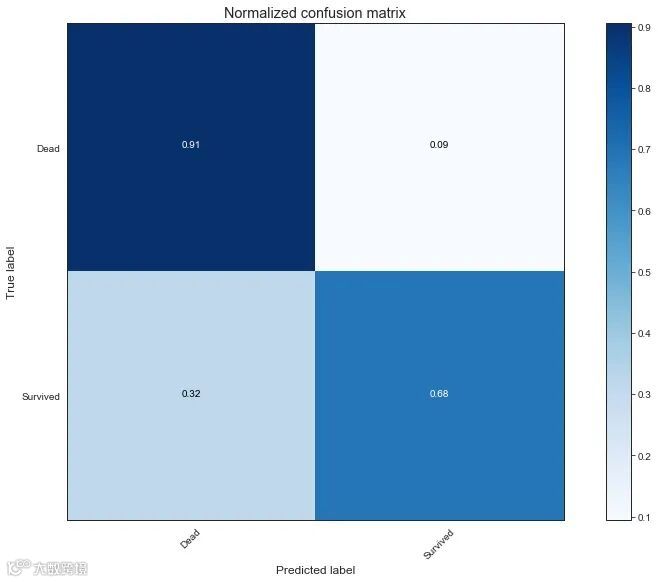

Now we model with a decision tree and look at the relevant metrics.

Decision Tree Model Accuracy/Precision Score: 82.04%

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| 0 | 0.82 | 0.91 | 0.86 | 549 |
| 1 | 0.82 | 0.68 | 0.75 | 342 |
| accuracy | | | 0.82 | 891 |
| macro avg | 0.82 | 0.79 | 0.80 | 891 |
| weighted avg | 0.82 | 0.82 | 0.82 | 891 |
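The report above lists per-class precision, recall and F1. Accuracy and precision are different metrics, even though the printed label combines them. A toy example computing each with sklearn.metrics (the labels and predictions are made up):

```python
from sklearn import metrics

y_true = [0, 0, 0, 1, 1, 1, 0, 1]  # toy labels
y_pred = [0, 0, 1, 1, 0, 1, 0, 1]  # toy predictions

acc = metrics.accuracy_score(y_true, y_pred)    # share of all predictions that are correct
prec = metrics.precision_score(y_true, y_pred)  # share of predicted 1s that are truly 1
rec = metrics.recall_score(y_true, y_pred)      # share of true 1s that were predicted 1
print(f"Accuracy: {acc:.2%}  Precision: {prec:.2%}  Recall: {rec:.2%}")
print(metrics.classification_report(y_true, y_pred))
```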

Cross-validation
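The BEFORE/AFTER scores that follow come from cross-validating a decision tree on the binned features, but that code is not shown. The sketch below assumes a ShuffleSplit of 10 random 60/30 splits and uses synthetic data from make_classification in place of data1. Note that cross_validate returns a train_score array only when return_train_score=True (the default has been False since scikit-learn 0.21).

```python
from sklearn import model_selection, tree
from sklearn.datasets import make_classification

X, y = make_classification(n_samples=300, n_features=7, random_state=0)
cv_split = model_selection.ShuffleSplit(n_splits=10, test_size=0.3, train_size=0.6, random_state=0)

dtree = tree.DecisionTreeClassifier(random_state=0)
results = model_selection.cross_validate(dtree, X, y, cv=cv_split, return_train_score=True)

print(f"Training score mean: {results['train_score'].mean() * 100:.2f}")
print(f"Test score mean: {results['test_score'].mean() * 100:.2f}")
# The article reports spread as +/- 3 standard deviations of the test scores
print(f"Test score 3*std: +/- {results['test_score'].std() * 100 * 3:.2f}")
```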

Hyperparameter tuning

BEFORE DT Parameters: {'ccp_alpha': 0.0, 'class_weight': None, 'criterion': 'gini', 'max_depth': None, 'max_features': None, 'max_leaf_nodes': None, 'min_impurity_decrease': 0.0, 'min_impurity_split': None, 'min_samples_leaf': 1, 'min_samples_split': 2, 'min_weight_fraction_leaf': 0.0, 'presort': 'deprecated', 'random_state': 0, 'splitter': 'best'}

BEFORE DT Training w/bin score mean: 82.09

BEFORE DT Test w/bin score mean: 82.09

BEFORE DT Test w/bin score 3*std: +/- 5.57

----------

AFTER DT Parameters: {'criterion': 'gini', 'max_depth': 4, 'random_state': 0}

AFTER DT Training w/bin score mean: 87.40

AFTER DT Test w/bin score mean: 87.40

AFTER DT Test w/bin score 3*std: +/- 5.00
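Below is a sketch of how the AFTER parameters (criterion, max_depth, random_state) could be found with GridSearchCV. The grid values, the roc_auc scoring and the synthetic data are assumptions. best_score_ is measured in whatever metric scoring names, so a ROC AUC score is not directly comparable with an accuracy score.

```python
from sklearn import model_selection, tree
from sklearn.datasets import make_classification

X, y = make_classification(n_samples=300, n_features=7, random_state=0)
cv_split = model_selection.ShuffleSplit(n_splits=10, test_size=0.3, train_size=0.6, random_state=0)

param_grid = {
    "criterion": ["gini", "entropy"],
    "max_depth": [2, 4, 6, 8, 10, None],
    "random_state": [0],
}
# Try every combination in the grid and keep the one with the best mean CV score
search = model_selection.GridSearchCV(tree.DecisionTreeClassifier(), param_grid=param_grid,
                                      scoring="roc_auc", cv=cv_split)
search.fit(X, y)
print("AFTER DT Parameters:", search.best_params_)
print(f"Best mean test score (roc_auc): {search.best_score_ * 100:.2f}")
```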

Feature selection

BEFORE DT RFE Training Shape Old: (891, 7)

BEFORE DT RFE Training Columns Old: ['Sex_Code' 'Pclass' 'Embarked_Code' 'Title_Code' 'FamilySize'

'AgeBin_Code' 'FareBin_Code']

BEFORE DT RFE Training w/bin score mean: 82.09

BEFORE DT RFE Test w/bin score mean: 82.09

BEFORE DT RFE Test w/bin score 3*std: +/- 5.57

----------

AFTER DT RFE Training Shape New: (891, 6)

AFTER DT RFE Training Columns New: ['Sex_Code' 'Pclass' 'Title_Code' 'FamilySize' 'AgeBin_Code'

'FareBin_Code']

AFTER DT RFE Training w/bin score mean: 83.06

AFTER DT RFE Test w/bin score mean: 83.06

AFTER DT RFE Test w/bin score 3*std: +/- 6.22

----------

AFTER DT RFE Tuned Parameters: {'criterion': 'gini', 'max_depth': 4, 'random_state': 0}

AFTER DT RFE Tuned Training w/bin score mean: 87.34

AFTER DT RFE Tuned Test w/bin score mean: 87.34

AFTER DT RFE Tuned Test w/bin score 3*std: +/- 6.21

----------
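The RFE outputs above show the tree dropping Embarked_Code and keeping 6 of the 7 binned features. Below is a minimal sketch of recursive feature elimination with cross-validation (RFECV) on synthetic data. On the real data, the kept column names would be `data1[data1_x_bin].columns.values[selector.get_support()]`.

```python
from sklearn import feature_selection, model_selection, tree
from sklearn.datasets import make_classification

X, y = make_classification(n_samples=300, n_features=7, n_informative=3, random_state=0)
cv_split = model_selection.ShuffleSplit(n_splits=10, test_size=0.3, train_size=0.6, random_state=0)

dtree = tree.DecisionTreeClassifier(random_state=0)
# Repeatedly drop the least important feature (by feature_importances_) and keep
# the feature subset with the best cross-validated accuracy
selector = feature_selection.RFECV(dtree, step=1, scoring="accuracy", cv=cv_split)
selector.fit(X, y)

X_selected = X[:, selector.support_]
print("Features kept:", selector.n_features_, "of", X.shape[1])
```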

07

Model validation

Next, we combine the models by voting (hard and soft voting ensembles).

Hard Voting Training w/bin score mean: 86.59

Hard Voting Test w/bin score mean: 82.39

Hard Voting Test w/bin score 3*std: +/- 4.95

----------

Soft Voting Training w/bin score mean: 87.15

Soft Voting Test w/bin score mean: 82.35

Soft Voting Test w/bin score 3*std: +/- 4.85

----------
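Hard voting takes the majority class predicted by the member models. Soft voting averages their predicted probabilities, so every member must implement predict_proba. Below is a minimal sketch with VotingClassifier. The member models and the synthetic data are assumptions, not the article's exact ensemble.

```python
from sklearn import ensemble, linear_model, model_selection, naive_bayes, tree
from sklearn.datasets import make_classification

X, y = make_classification(n_samples=300, n_features=7, random_state=0)
cv_split = model_selection.ShuffleSplit(n_splits=10, test_size=0.3, train_size=0.6, random_state=0)

estimators = [
    ("lr", linear_model.LogisticRegression(max_iter=1000)),
    ("nb", naive_bayes.GaussianNB()),
    ("dt", tree.DecisionTreeClassifier(max_depth=4, random_state=0)),
    ("rf", ensemble.RandomForestClassifier(n_estimators=100, random_state=0)),
]

summary = {}
for voting in ("hard", "soft"):
    vote = ensemble.VotingClassifier(estimators=estimators, voting=voting)
    res = model_selection.cross_validate(vote, X, y, cv=cv_split, return_train_score=True)
    summary[voting] = res["test_score"].mean()
    print(f"{voting.title()} Voting Training mean: {res['train_score'].mean() * 100:.2f}")
    print(f"{voting.title()} Voting Test mean: {summary[voting] * 100:.2f}")
```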

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 418 entries, 0 to 417

Data columns (total 21 columns):

PassengerId 418 non-null int64

Pclass 418 non-null int64

Name 418 non-null object

Sex 418 non-null object

Age 418 non-null float64

SibSp 418 non-null int64

Parch 418 non-null int64

Ticket 418 non-null object

Fare 418 non-null float64

Cabin 91 non-null object

Embarked 418 non-null object

FamilySize 418 non-null int64

IsAlone 418 non-null int64

Title 418 non-null object

FareBin 418 non-null category

AgeBin 418 non-null category

Sex_Code 418 non-null int64

Embarked_Code 418 non-null int64

Title_Code 418 non-null int64

AgeBin_Code 418 non-null int64

FareBin_Code 418 non-null int64

dtypes: category(2), float64(2), int64(11), object(6)

memory usage: 63.1+ KB

None

----------

Validation Data Distribution:

0 0.633971

1 0.366029

Name: Survived, dtype: float64
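The validation printout above describes predictions for test.csv: about 63.4% predicted 0 and 36.6% predicted 1. Below is a minimal sketch of that final step. It uses toy stand-ins for data1 and data_val, a depth-4 tree as the assumed model, and a hypothetical output file name submit.csv.

```python
import pandas as pd
from sklearn import tree

# Toy stand-ins for data1 (training) and data_val (validation) with encoded features
train = pd.DataFrame({"Sex_Code": [0, 1, 0, 1, 1, 0], "Pclass": [1, 3, 3, 1, 2, 2],
                      "Survived": [1, 0, 1, 0, 0, 1]})
val = pd.DataFrame({"PassengerId": [892, 893, 894], "Sex_Code": [1, 0, 1], "Pclass": [3, 3, 2]})
features = ["Sex_Code", "Pclass"]

model = tree.DecisionTreeClassifier(max_depth=4, random_state=0)
model.fit(train[features], train["Survived"])
val["Survived"] = model.predict(val[features])

# Share of each predicted class, as in "Validation Data Distribution"
print("Validation Data Distribution:\n", val["Survived"].value_counts(normalize=True))
val[["PassengerId", "Survived"]].to_csv("submit.csv", index=False)
```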

08

Summary
