Author: Paco Nathan  Translator: 笪洁琼  Proofreader: 和中华

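This article gives a brief introduction to natural language processing (sometimes called "text analytics") in Python with spaCy and related libraries. It also covers some current, state-of-the-art applications.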

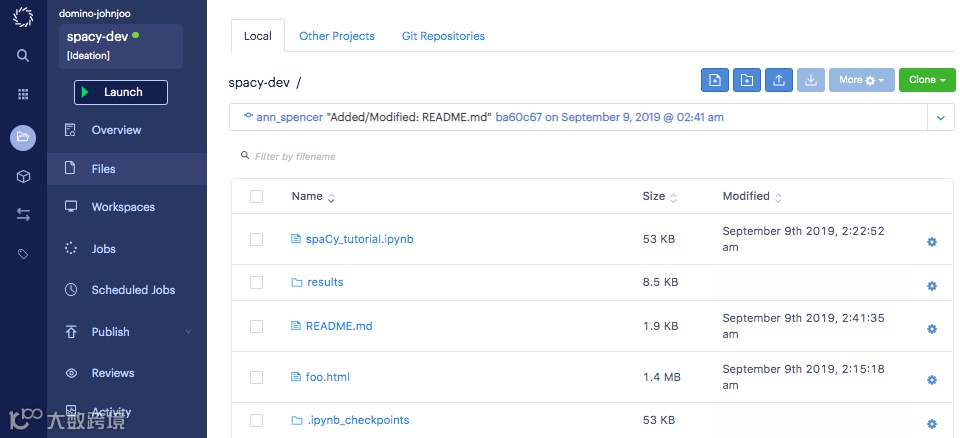

Instructions page (environment management):

https://support.dominodatalab.com/hc/en-us/articles/115000392643-Environment-management

```python
import spacy

nlp = spacy.load("en_core_web_sm")
```

```python
text = "The rain in Spain falls mainly on the plain."
doc = nlp(text)

# Print each token's text, lemma, part of speech and stopword flag
for token in doc:
    print(token.text, token.lemma_, token.pos_, token.is_stop)
```

```
The the DET True
rain rain NOUN False
in in ADP True
Spain Spain PROPN False
falls fall VERB False
mainly mainly ADV False
on on ADP True
the the DET True
plain plain NOUN False
. . PUNCT False
```

```python
import pandas as pd

cols = ("text", "lemma", "POS", "explain", "stopword")
rows = []

for t in doc:
    row = [t.text, t.lemma_, t.pos_, spacy.explain(t.pos_), t.is_stop]
    rows.append(row)

df = pd.DataFrame(rows, columns=cols)
df
```

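The columns of the table are:

- `text`: the original text
- `lemma`: the lemma, i.e. the root form of the word
- `POS`: the part of speech
- `explain`: a short description of the POS tag, from `spacy.explain()`
- `stopword`: a flag for stopwords, i.e. common words that might be filtered out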
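The lemma and the stopword flag are often used together to reduce a sentence to its content words. The following is a minimal plain-Python sketch. It assumes the `(text, lemma, POS, is_stop)` rows are copied by hand from the token output printed above rather than produced by spaCy, and the helper name `content_lemmas` is hypothetical.

```python
# Rows copied by hand from the token output above: (text, lemma, POS, is_stop)
rows = [
    ("The", "the", "DET", True),
    ("rain", "rain", "NOUN", False),
    ("in", "in", "ADP", True),
    ("Spain", "Spain", "PROPN", False),
    ("falls", "fall", "VERB", False),
    ("mainly", "mainly", "ADV", False),
    ("on", "on", "ADP", True),
    ("the", "the", "DET", True),
    ("plain", "plain", "NOUN", False),
    (".", ".", "PUNCT", False),
]


def content_lemmas(rows):
    """Hypothetical helper: keep lemmas of tokens that are neither stopwords nor punctuation."""
    return [lemma for _text, lemma, pos, is_stop in rows
            if not is_stop and pos != "PUNCT"]


print(content_lemmas(rows))
# → ['rain', 'Spain', 'fall', 'mainly', 'plain']
```

With a real spaCy `Doc`, a similar filter is `[t.lemma_ for t in doc if not t.is_stop and not t.is_punct]`.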

```python
from spacy import displacy

displacy.render(doc, style="dep")
```

```python
text = "We were all out at the zoo one day, I was doing some acting, walking on the railing of the gorilla exhibit. I fell in. Everyone screamed and Tommy jumped in after me, forgetting that he had blueberries in his front pocket. The gorillas just went wild."
doc = nlp(text)

for sent in doc.sents:
    print(">", sent)
```

```
> We were all out at the zoo one day, I was doing some acting, walking on the railing of the gorilla exhibit.
> I fell in.
> Everyone screamed and Tommy jumped in after me, forgetting that he had blueberries in his front pocket.
> The gorillas just went wild.
```

```python
for sent in doc.sents:
    print(">", sent.start, sent.end)
```

```python
doc[48:54]
```

```
The gorillas just went wild.
```

```python
token = doc[51]
print(token.text, token.lemma_, token.pos_)
```

```
went go VERB
```
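The `start` and `end` values of a sentence are token offsets into the `Doc`. `start` is inclusive and `end` is exclusive, just like a Python slice. That is why `doc[48:54]` yields the six tokens of the last sentence (five words plus the period) and `doc[51]` is its fourth token, "went". Below is a plain-list sketch of the same arithmetic. It assumes a hypothetical list of placeholder tokens standing in for the first 48 tokens of the story.

```python
# Hypothetical stand-in for the Doc: 48 placeholder tokens followed by the
# tokens of the last sentence, "The gorillas just went wild."
last_sentence = ["The", "gorillas", "just", "went", "wild", "."]
tokens = ["<tok>"] * 48 + last_sentence

start = 48                         # offset of the sentence's first token
end = start + len(last_sentence)   # exclusive end offset, as in Python slices

print(start, end)          # → 48 54
print(tokens[start:end])   # the six tokens of the sentence
print(tokens[51])          # → went
```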

```python
import sys
import warnings

warnings.filterwarnings("ignore")
```

```python
import sys
import traceback

import requests
from bs4 import BeautifulSoup


def get_text(url):
    """Download a web page and return the text of all its <p> elements."""
    buf = []

    try:
        soup = BeautifulSoup(requests.get(url).text, "html.parser")

        for p in soup.find_all("p"):
            buf.append(p.get_text())

        return "\n".join(buf)
    except Exception:
        print(traceback.format_exc())
        sys.exit(-1)
```

The license texts used below come from https://opensource.org/licenses/

```python
lic = {}
lic["mit"] = nlp(get_text("https://opensource.org/licenses/MIT"))
lic["asl"] = nlp(get_text("https://opensource.org/licenses/Apache-2.0"))
lic["bsd"] = nlp(get_text("https://opensource.org/licenses/BSD-3-Clause"))

for sent in lic["bsd"].sents:
    print(">", sent)
```

```
> SPDX short identifier: BSD-3-Clause
> Note: This license has also been called the "New BSD License" or "Modified BSD License"
> See also the 2-clause BSD License.
…
```

```python
pairs = [
    ["mit", "asl"],
    ["asl", "bsd"],
    ["bsd", "mit"],
]

for a, b in pairs:
    print(a, b, lic[a].similarity(lic[b]))
```

```
mit asl 0.9482039305669306
asl bsd 0.9391555350757145
bsd mit 0.9895838089575453
```
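By default, `Doc.similarity` returns the cosine similarity of the two documents' vectors. A `Doc`'s vector is, by default, the average of its token vectors. Scores between 0.94 and 0.99 mean the averaged vectors point in nearly the same direction. Read them with some caution: the small `en_core_web_sm` model ships without static word vectors, so spaCy falls back on context-sensitive tensors. Below is a minimal stdlib sketch of the averaging-plus-cosine computation. The 3-dimensional token vectors are made up for illustration.

```python
import math


def doc_vector(token_vectors):
    """Average equal-length token vectors, mirroring spaCy's default Doc.vector."""
    n = len(token_vectors)
    return [sum(dims) / n for dims in zip(*token_vectors)]


def cosine(u, v):
    """Cosine similarity: dot product divided by the product of the norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical token vectors for three tiny "documents"
doc_a = [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]   # averages to [0.5, 0.5, 1.0]
doc_b = [[1.0, 1.0, 2.0]]                    # parallel to doc_a's average
doc_c = [[1.0, -1.0, 0.0]]                   # orthogonal to doc_a's average

print(cosine(doc_vector(doc_a), doc_vector(doc_b)))  # approximately 1.0
print(cosine(doc_vector(doc_a), doc_vector(doc_c)))  # → 0.0
```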

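Now let's dig into spaCy's NLU (natural language understanding) features. Suppose we have a document to parse. From a purely grammatical standpoint, we can extract its noun chunks (https://spacy.io/usage/linguistic-features#noun-chunks), that is, each of its noun phrases: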

```python
text = "Steve Jobs and Steve Wozniak incorporated Apple Computer on January 3, 1977, in Cupertino, California."
doc = nlp(text)

for chunk in doc.noun_chunks:
    print(chunk.text)
```

```
Steve Jobs
Steve Wozniak
Apple Computer
January
Cupertino
California
```
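spaCy derives `noun_chunks` from the dependency parse. To make the idea concrete without a model, here is a deliberately simplified substitute, not how spaCy works. It groups maximal runs of determiners, adjectives and nouns from POS tags, using the tags printed earlier for the "rain in Spain" sentence. The helper name `naive_noun_chunks` is hypothetical.

```python
# POS tags copied from the earlier output for "The rain in Spain falls mainly on the plain."
tagged = [
    ("The", "DET"), ("rain", "NOUN"), ("in", "ADP"), ("Spain", "PROPN"),
    ("falls", "VERB"), ("mainly", "ADV"), ("on", "ADP"), ("the", "DET"),
    ("plain", "NOUN"), (".", "PUNCT"),
]

CHUNK_POS = {"DET", "ADJ", "NOUN", "PROPN"}  # tags allowed inside a chunk
HEAD_POS = {"NOUN", "PROPN"}                 # a chunk must contain one of these


def naive_noun_chunks(tagged):
    """Hypothetical POS-pattern chunker: maximal CHUNK_POS runs that contain a noun."""
    chunks, run = [], []
    for text, pos in tagged + [("", "END")]:  # sentinel flushes the final run
        if pos in CHUNK_POS:
            run.append((text, pos))
            continue
        if any(p in HEAD_POS for _, p in run):
            chunks.append(" ".join(t for t, _ in run))
        run = []
    return chunks


print(naive_noun_chunks(tagged))
# → ['The rain', 'Spain', 'the plain']
```

A pattern like this breaks easily on coordination, numbers and nested phrases, which is why a parser-based approach such as spaCy's is preferable in practice.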

```python
for ent in doc.ents:
    print(ent.text, ent.label_)
```

```python
displacy.render(doc, style="ent")
```

```python
import nltk

nltk.download("wordnet")
```

```
[nltk_data] Downloading package wordnet to /home/ceteri/nltk_data...
[nltk_data] Package wordnet is already up-to-date!
True
```

```python
from spacy_wordnet.wordnet_annotator import WordnetAnnotator

print("before", nlp.pipe_names)

# spaCy 2.x API: insert the component instance right after the tagger
if "WordnetAnnotator" not in nlp.pipe_names:
    nlp.add_pipe(WordnetAnnotator(nlp.lang), after="tagger")

print("after", nlp.pipe_names)
```

```
before ['tagger', 'parser', 'ner']
after ['tagger', 'WordnetAnnotator', 'parser', 'ner']
```

```python
token = nlp("withdraw")[0]
token._.wordnet.synsets()
```

```
[Synset('withdraw.v.01'),
 Synset('retire.v.02'),
 Synset('disengage.v.01'),
 Synset('recall.v.07'),
 Synset('swallow.v.05'),
 Synset('seclude.v.01'),
 Synset('adjourn.v.02'),
 Synset('bow_out.v.02'),
 Synset('withdraw.v.09'),
 Synset('retire.v.08'),
 Synset('retreat.v.04'),
 Synset('remove.v.01')]
```

```python
token._.wordnet.lemmas()
```

```
[Lemma('withdraw.v.01.withdraw'),
 Lemma('withdraw.v.01.retreat'),
 Lemma('withdraw.v.01.pull_away'),
 Lemma('withdraw.v.01.draw_back'),
 Lemma('withdraw.v.01.recede'),
 Lemma('withdraw.v.01.pull_back'),
 Lemma('withdraw.v.01.retire'),
 …
```

```python
token._.wordnet.wordnet_domains()
```

```
['astronomy',
 'school',
 'telegraphy',
 'industry',
 'psychology',
 'ethnology',
 'ethnology',
 'administration',
 'school',
 'finance',
 'economy',
 'exchange',
 'banking',
 'commerce',
 'medicine',
 'ethnology',
 'university',
 …
```

```python
domains = ["finance", "banking"]
sentence = nlp("I want to withdraw 5,000 euros.")
enriched_sent = []

for token in sentence:
    # get synsets within the desired domains
    synsets = token._.wordnet.wordnet_synsets_for_domain(domains)

    if synsets:
        lemmas_for_synset = []

        for s in synsets:
            # collect the variants (lemma names) of every matching synset
            lemmas_for_synset.extend(s.lemma_names())

        # add one "(a|b|c)" group per token to the enriched sentence
        enriched_sent.append("({})".format("|".join(set(lemmas_for_synset))))
    else:
        enriched_sent.append(token.text)

print(" ".join(enriched_sent))
```

```
I (require|want|need) to (draw_off|withdraw|draw|take_out) 5,000 euros .
```
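The enriched sentence is a form of query expansion. Each token that has synsets in the chosen domains is replaced by an alternation of its synonyms. The sketch below reproduces that output format in plain Python. It assumes a hypothetical hard-coded synonym table whose entries are copied from the output above instead of being looked up in WordNet. It also sorts the alternatives, because joining a `set` (as the code above does) gives no guaranteed order.

```python
# Hypothetical domain-filtered synonym table, copied from the output above
# rather than looked up in WordNet.
SYNONYMS = {
    "want": ["want", "require", "need"],
    "withdraw": ["draw_off", "withdraw", "draw", "take_out"],
}


def enrich(tokens, synonyms):
    """Replace each token that has synonyms with '(a|b|c)'; keep other tokens as-is."""
    out = []
    for tok in tokens:
        variants = synonyms.get(tok)
        if variants:
            # sorted() makes the order deterministic, unlike iterating a set
            out.append("({})".format("|".join(sorted(set(variants)))))
        else:
            out.append(tok)
    return " ".join(out)


tokens = ["I", "want", "to", "withdraw", "5,000", "euros", "."]
print(enrich(tokens, SYNONYMS))
# → I (need|require|want) to (draw|draw_off|take_out|withdraw) 5,000 euros .
```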

```python
import scattertext as st

# Merge multi-token entities and noun chunks into single tokens (spaCy 2.x API)
if "merge_entities" not in nlp.pipe_names:
    nlp.add_pipe(nlp.create_pipe("merge_entities"))

if "merge_noun_chunks" not in nlp.pipe_names:
    nlp.add_pipe(nlp.create_pipe("merge_noun_chunks"))

convention_df = st.SampleCorpora.ConventionData2012.get_data()
corpus = st.CorpusFromPandas(
    convention_df,
    category_col="party",
    text_col="text",
    nlp=nlp,
).build()
```

```python
html = st.produce_scattertext_explorer(
    corpus,
    category="democrat",
    category_name="Democratic",
    not_category_name="Republican",
    width_in_pixels=1000,
    metadata=convention_df["speaker"],
)
```

```python
from IPython.display import IFrame

file_name = "foo.html"

with open(file_name, "wb") as f:
    f.write(html.encode("utf-8"))

IFrame(src=file_name, width=1200, height=700)
```
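The `merge_entities` and `merge_noun_chunks` components added before building the corpus retokenize each `Doc` so that every named entity or noun chunk becomes a single token. As a result, downstream term counts can treat a phrase such as "Steve Jobs" as one term. Below is a plain-Python sketch of that merge step. It assumes hypothetical `(start, end)` token spans (end exclusive) that are non-empty and do not overlap. The helper name `merge_spans` is also hypothetical.

```python
def merge_spans(tokens, spans):
    """Hypothetical helper: join each (start, end) span of tokens into one token."""
    for start, end in spans:
        if not 0 <= start < end <= len(tokens):
            raise ValueError(f"invalid span {(start, end)}")
    ends_by_start = dict(spans)  # span start -> exclusive span end
    merged, i = [], 0
    while i < len(tokens):
        if i in ends_by_start:
            end = ends_by_start[i]
            merged.append(" ".join(tokens[i:end]))  # whole span becomes one token
            i = end
        else:
            merged.append(tokens[i])
            i += 1
    return merged


tokens = ["Steve", "Jobs", "and", "Steve", "Wozniak", "incorporated", "Apple", "Computer"]
# Hypothetical spans for "Steve Jobs", "Steve Wozniak" and "Apple Computer"
spans = [(0, 2), (3, 5), (6, 8)]
print(merge_spans(tokens, spans))
# → ['Steve Jobs', 'and', 'Steve Wozniak', 'incorporated', 'Apple Computer']
```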

Summary

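It is worth noting that machine learning for natural language has advanced greatly since the mid-2000s, when Google began winning international language translation competitions. Another major shift came in 2017 and 2018: following the many successes of deep learning, these methods began to outperform earlier machine learning models.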

For example, see the ELMo language embedding from Allen AI research, followed by Google's BERT (https://ai.googleblog.com/2018/11/open-sourcing-bert-state-of-art-pre.html), and more recently by


Reposted from: the 数据派THU WeChat official account.
